No 79: August digital and AI roundup

Teacher AI assistants, GPT-5, AI energy use, children's data lives, robot Olympics, bye bye dial-up, try Trickle and much more...

What’s happening

Welcome back to readers old and new! After an August break we’re gearing up for the new term and will be back to normal service next week. But, as we gently ease out of summer, here’s an overview of some of the digital news, views and resources that caught our eye over the holidays.

Our second Parent Zone quick read has been published! Read AI literacy activity ideas for educators to find out why AI literacy in schools calls for a critical, ethical approach which is genuinely cross-curricular, and how to do it.

AI roundup

AI teacher helpers reviewed

Common Sense Media has conducted in-depth evaluations of popular AI teacher assistant platforms, including Google's Gemini in Google Classroom (through Google Workspace for Education), Khanmigo's Teacher Assistant, Curipod, and MagicSchool. The testing examined opportunities and potential for harm across multiple categories, including effectiveness, content accuracy, bias and student safety. It finds the tools can be “powerful” for boosting productivity and creativity, but they need experienced educators to use them well. Matthew Wemyss unpacks the Common Sense review and emphasises four points:

AI does not know your class or the needs of your students

AI does not know the culture of your school

AI cannot tell if a lesson plan is biased, inaccurate or unhelpful

AI can become an ‘invisible influencer’ and shape what students learn in ways no one notices until it's too late

GPT-5: it does stuff

It feels like a while ago now, but at the start of the month GPT-5 was suddenly released and caused a few ructions. OpenAI had to restore access to older models for unhappy paying subscribers, and users of GPT companions grieved the change in personality of their previously warmer and chattier AI chatbots.

Ethan Mollick had, as always, an excellent overview of the way GPT-5 “just does stuff, often extraordinary stuff, sometimes weird stuff, sometimes very AI stuff, on its own. And that is what makes it so interesting”. GPT-5 is the overall name for a collection of models and also for the router that picks which model answers your query: a smaller, faster one for simple uses or the more powerful reasoning model where needed. This is its strength, but it was also where the problems lay for many people, as he explains in a follow-up post:

“if you just wanted to chat, GPT-5 was supposed to use its weaker specialised chat models; if you were trying to solve a math problem, GPT-5 was supposed to send you to its slower, more expensive GPT-5 Thinking model. This would save money and give more people access to the best AIs. But the rollout had issues. This practice wasn’t well explained and the router did not work well at first. The result is that one person using GPT-5 got a very smart answer while another got a bad one.”

As a result, OpenAI introduced some updates, such as letting users choose between ‘auto’, ‘fast’ and ‘reasoning’ models and making the personality ‘warmer’. Ultimately, though, GPT-5 shows that more powerful models are getting cheaper and easier to use. The impact?

“Powerful AI is cheap enough to give away, easy enough that you don't need a manual, and capable enough to outperform humans at a range of intellectual tasks. A flood of opportunities and problems are about to show up in classrooms, courtrooms, and boardrooms around the world. The Mass Intelligence era is what happens when you give a billion people access to an unprecedented set of tools and see what they do with it. We are about to find out what that is like.”

More AI energy transparency – to an extent

Google has shared how much energy the Gemini apps use for a median text query: 0.24 watt-hours of electricity, the equivalent of running a standard microwave for about one second. Google also provided estimates for the water consumption and carbon emissions associated with a text prompt to Gemini. It’s more information than any big tech company has yet provided, and much-needed, very welcome data for examining the environmental impact of AI tools. But is it enough? Well, not really. It only covers text queries, not image or video. And, as MIT Technology Review points out, we don’t know how many queries Gemini handles, so we can’t work out the product’s total energy impact. Google wouldn’t provide that figure, but OpenAI does share its numbers: ChatGPT receives 2.5 billion queries every day, and Sam Altman has said an average query uses about 0.34 watt-hours. So:

“over the course of a year, that would add up to over 300 gigawatt-hours – the same as powering nearly 30,000 US homes annually. When you put it that way, it starts to sound like a lot of seconds in microwaves.”
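To make that back-of-envelope arithmetic concrete, here is a small sketch in Python. It assumes about 0.34 watt-hours per ChatGPT query (the average Sam Altman has quoted publicly) and roughly 10,500 kWh of electricity per US home per year, which is an approximate assumption rather than an official figure. The function names are illustrative only.

```python
# Back-of-envelope estimate of ChatGPT's annual electricity use.
# Assumptions: ~0.34 Wh per query (figure quoted by Sam Altman) and
# ~10,500 kWh per US home per year (rough, illustrative average).
QUERIES_PER_DAY = 2.5e9        # daily ChatGPT queries, as cited above
WH_PER_QUERY = 0.34            # assumed average energy per query, in Wh
DAYS_PER_YEAR = 365
KWH_PER_US_HOME_YEAR = 10_500  # assumed annual use of one US home, in kWh


def annual_gwh(queries_per_day: float, wh_per_query: float) -> float:
    """Total energy over one year, in gigawatt-hours (1 GWh = 1e9 Wh)."""
    return queries_per_day * wh_per_query * DAYS_PER_YEAR / 1e9


def equivalent_homes(gwh: float) -> float:
    """How many homes this much energy would power for a year (1 GWh = 1e6 kWh)."""
    return gwh * 1e6 / KWH_PER_US_HOME_YEAR


total = annual_gwh(QUERIES_PER_DAY, WH_PER_QUERY)
print(f"{total:.0f} GWh per year")                      # prints: 310 GWh per year
print(f"about {equivalent_homes(total):,.0f} US homes")  # prints: about 29,548 US homes
```

Plugging in Google's 0.24 Wh median figure instead gives about 219 GWh for the same number of queries, which shows how sensitive these totals are to the per-query estimate, and why the missing query counts matter.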

AI slowdown in South Korean schools

High hopes for South Korea’s AI textbook initiative in schools have been dashed after teachers and parents became critical of the project, with teachers overwhelmed and sceptical parents petitioning against it. Political opposition and upheaval also played a part.

AI dilemmas for teachers

This is an interesting set of resources from a research team at Monash University, led by Neil Selwyn. It offers three A3 ‘provocation posters’ featuring real-life examples that have cropped up in the team’s research on teachers' experiences of AI, covering dilemmas around student feedback, chatbot use and grading.

Stressed students

A HEPI report on student wellbeing in the AI era in higher education finds that the widespread adoption of AI tools is linked to considerable student stress, with students often fearing they might be unintentionally breaking the rules. There are also concerns that universities are not moving fast enough to provide AI tools, leaving students to work out for themselves how best to use them.

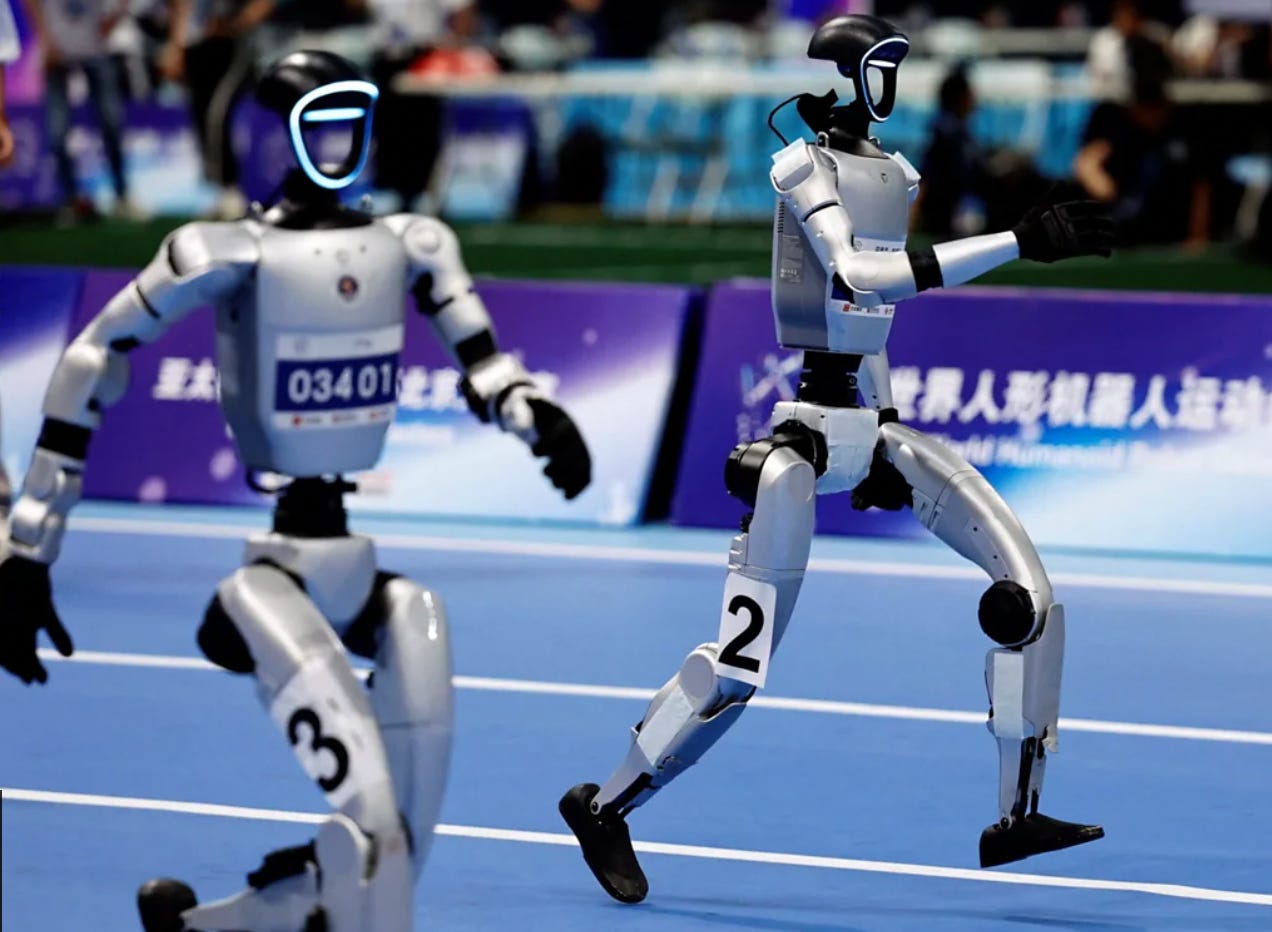

Going for gold

Earlier this month, the first World Humanoid Robot Games took place in Beijing, China, with participants from 16 countries including the US, Germany and Japan. Robot athletes competed across a range of events including athletics, football, dance and martial arts. Watch the BBC’s report for the compelling sight of robots falling over.

Also in August:

OpenAI and Anthropic collaborate on safety tests, revealing flaws in each company’s offerings

OpenAI, Google, and Perplexity are striking deals to gather real-world data that can’t simply be scraped from the internet, raising privacy and fairness concerns

Jobs and Skills Australia has modelled the potential impact of AI on the workforce and found that careers in bookkeeping, marketing or programming are at risk, but jobs in nursing, construction or hospitality are pretty safe

Quick links

Revealing Reality’s Children’s Data Lives research is a longitudinal study following 30 children, aged 8 to 17, through a combination of filmed ethnographic interviews and UX and platform analysis. This second report from the study explores how children's digital lives are shaped by specific design features of digital platforms, focusing on three core areas of design: data agreements, age assurance and algorithms.

Roblox is facing “a string of nightmarish lawsuits”, which depict it as “a feeding ground for predators cloaked in cartoon graphics” reports Politico. It raises questions about how to regulate an online world like Roblox, a platform that falls somewhere between a video game and social network and has 111 million monthly users.

Meanwhile, TikTok is cutting hundreds of UK content moderator jobs, despite the recent UK online safety rules mandating age checks.

Next week UNESCO will hold its annual Digital Learning Week in Paris. This year's theme is "AI and the future of education: Disruptions, dilemmas and directions". But get your skates on! Today – Friday 29 August – is the last day to register.

School staff members' names, addresses, phone numbers, national insurance and passport numbers may have been exposed in a cyberattack, reports Schools Week. The breach occurred at a third-party IT provider for Online SCR, a company that manages background checks for schools.

Computing at School has some tips from the classroom on boosting A-Level Computer Science results.

Neil Selwyn’s new book Digital Degrowth is definitely on our to-read list.

AOL is discontinuing its dial-up internet service after 34 years. Want to relive how a dial-up modem sounded? Listen here.

We’re reading, listening…

How OpenAI's ChatGPT Guided a Teen to His Death

The Center for Humane Technology podcast investigates the court case filed against OpenAI and Sam Altman by the parents of a 16-year-old boy who took his own life. The lawsuit claims that design features and choices in ChatGPT led predictably to the teenager’s death. The BBC also reports on the case.

Back to school

Wired’s got a back-to-school special on tech and education. It includes a look at the different ways teachers are using AI in the classroom, more on problems at Roblox, Silicon Valley’s obsession with microschools, and life without social media in Australia.

‘There are things that AI does for me that I would now hate to do myself, and hope never to do again’

Casey Newton shares his productivity tools and how he’s using AI. It’s from a journalist’s perspective but likely of wider interest. (h/t to Storythings)

Give it a try

Trickle

Need some retro distraction as the new term kicks in – or maybe a metaphor? Give Trickle a try.

Connected Learning is by Sarah Horrocks and Michelle Pauli